In the sleek, unrelenting world of Silicon Valley, where disruption is a mantra and youth is both a currency and a burden, Suchir Balaji stood out as someone who questioned the very foundations of the empire he helped build. At just 26, he had been a researcher at OpenAI, one of the most influential AI companies on the planet. And yet, instead of riding the wave of AI euphoria, he chose to speak out against it, voicing concerns that the systems he helped create, particularly ChatGPT, were fundamentally flawed, ethically dubious, and legally questionable.

His death in November 2024, which became widely known the following month, shocked the tech world. But it also forced many to confront the uncomfortable truths he had been raising all along.

Just a Kid Who Dared to Question Giants

Balaji wasn’t your archetypal Silicon Valley visionary. He wasn’t a grizzled founder with a decade of battle scars or a loud-mouthed tech bro proclaiming himself the savior of humanity. He was just a kid, albeit a remarkably sharp one, who started working at OpenAI in 2020, fresh out of the University of California, Berkeley.

Like many others in his field, he had been captivated by the promise of artificial intelligence: the dream that neural networks could solve humanity’s greatest problems, from curing diseases to tackling climate change. For Balaji, AI wasn’t just code—it was a kind of alchemy, a tool to turn imagination into reality.

And yet, by 2024, that dream had curdled into something darker. What Balaji saw in OpenAI—and in ChatGPT, its most famous product—was a machine that, instead of helping humanity, was exploiting it.

ChatGPT: A Disruptor or a Thief?

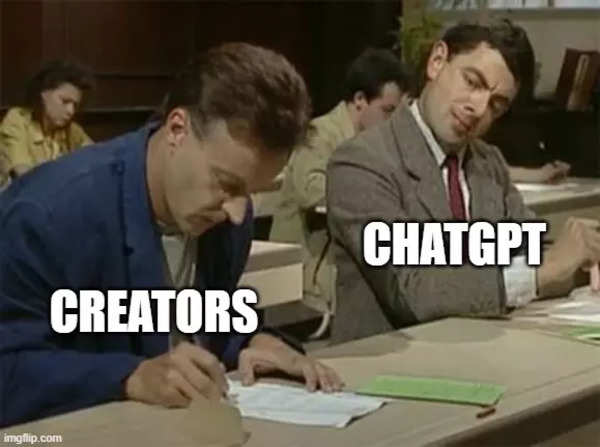

ChatGPT was, and still is, a marvel of modern technology. It can whip up poems, solve coding problems, and explain quantum physics in seconds. But behind its charm lies a contentious fact: like other large generative AI models, ChatGPT was built on mountains of text scraped from the internet, and that text includes copyrighted content.

Balaji’s critique of ChatGPT was simple: it was too dependent on the labor of others. He argued that OpenAI had trained its models on copyrighted material without permission, and that doing so violated the intellectual property rights of countless creators, from programmers to journalists.

In simplified form, the process of building and then using ChatGPT works like this:

Step 1: Input the Data – OpenAI collected massive amounts of text from the internet, including blogs, news articles, programming forums, and books. Most of this data was publicly accessible, but publicly accessible is not the same as free to use: much of it was copyrighted.

Step 2: Train the Model – The AI analyzed this data to learn how to generate human-like text.

Step 3: Generate the Output – When you ask ChatGPT a question, it typically doesn’t spit out exact copies of the text it was trained on, but its responses often draw heavily from the patterns and information in the original data.
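To make those three steps concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT is actually trained: it swaps the neural network for a simple word-pair (bigram) counter, and the three-sentence corpus and the function names `collect_corpus`, `train`, and `generate` are invented purely for illustration.

```python
import random
from collections import defaultdict

def collect_corpus():
    """Step 1: gather text. A hypothetical three-sentence stand-in for web-scale data."""
    return [
        "the model learns patterns from text",
        "the model generates new text from patterns",
        "writers publish text on the web",
    ]

def train(corpus):
    """Step 2: record which word follows which. These lists stand in for model weights."""
    follows = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current].append(nxt)
    return follows

def generate(follows, start, max_words=8, seed=0):
    """Step 3: build output one word at a time by sampling from the learned statistics."""
    rng = random.Random(seed)
    output = [start]
    # Stop at the length limit or when the last word was never seen with a successor.
    while len(output) < max_words and follows.get(output[-1]):
        output.append(rng.choice(follows[output[-1]]))
    return " ".join(output)

model = train(collect_corpus())
print(generate(model, "the"))
```

Even in this toy, the generated sentence may not appear anywhere in the corpus, yet every word pair in it was learned from someone else's text. That is the tension the steps above describe, at a vastly smaller scale.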

Here’s the problem, as Balaji saw it: the AI might not directly copy its training data, but it still relies on it in ways that make it a competitor to the original creators. For example, if you asked ChatGPT a programming question, it might generate an answer similar to one you’d find on Stack Overflow. The result? People stop visiting Stack Overflow, and the creators who share their expertise there lose traffic, influence, and income.

The Lawsuit That Could Change AI Forever

Balaji wasn’t alone in his concerns. In late 2023, The New York Times filed a lawsuit against OpenAI and its partner Microsoft, accusing them of illegally using millions of its articles to train their models. The Times argued that this unauthorized use directly harmed its business:

Content Mimicry: ChatGPT could generate summaries or rewordings of Times articles, effectively competing with the original pieces.

Market Impact: By generating content similar to what news organizations produce, AI systems threaten to replace traditional journalism.

The lawsuit also raised questions about the ethics of using copyrighted material to create tools that compete with the very sources they rely on. Microsoft and OpenAI defended their practices, arguing that their use of data falls under the legal doctrine of “fair use.” This argument hinges on the idea that training “transforms” the data into something new and that ChatGPT doesn’t directly replicate copyrighted works. But critics, including Balaji, believed this justification was tenuous at best.

What Critics Say About Generative AI

Balaji’s criticisms fit into a larger narrative of skepticism around large language models (LLMs) like ChatGPT. Here are the most common critiques:

Copyright Infringement: AI companies train their models on copyrighted content scraped without permission, undermining the rights of creators.

Market Harm: By providing free, AI-generated alternatives, these systems devalue the original works they draw from—be it news articles, programming tutorials, or creative writing.

Misinformation: Generative AI often produces “hallucinations”—fabricated information presented as fact—undermining trust in AI-generated content.

Opacity: AI companies rarely disclose what data their models are trained on, making it hard to assess the full scope of potential copyright violations.

Impact on Creativity: As AI models mimic human creativity, they may crowd out original creators, leaving the internet awash in regurgitated, derivative content.

Balaji’s Vision: A Call for Accountability

What set Balaji apart wasn’t just his critique of AI—it was the clarity and conviction with which he presented his case. He believed that the unchecked growth of generative AI posed immediate dangers, not hypothetical ones. As more people relied on AI tools like ChatGPT, the platforms and creators that fueled the internet’s knowledge economy were being pushed aside.

Balaji also argued that the legal framework governing AI was hopelessly outdated. U.S. copyright law, written long before the rise of AI, doesn’t adequately address questions about data scraping, fair use, and market harm. He called for new regulations that would ensure creators are fairly compensated for their contributions while still allowing AI innovation to flourish.

A Legacy of Questions, Not Answers

Suchir Balaji wasn’t a tech titan or a revolutionary visionary. He was just a young researcher grappling with the implications of his work. In speaking out against OpenAI, he forced his peers—and the world—to confront the ethical dilemmas at the heart of generative AI. His death is a reminder that the pressures of innovation, ambition, and responsibility can weigh heavily, even on the brightest minds. But his critique of AI lives on, raising a fundamental question: as we build smarter machines, are we being fair to the humans who make their existence possible?